Octopus: a modular haptic feedback interface

Written on September 26th, 2025 by SS

This project explores the design and development of an open-ended, modular hardware/software interface named “Octopus” to expand research in multi-modal data exploration. The system is designed for integration with the “Dark Sonification” framework [1], an interactive data display system for exploratory data analysis through affect [2] developed by PhD researcher Miguel Angel Crozzoli, and focuses on applying data physicalization techniques through haptic feedback. “Octopus” is an open-source, customizable device that provides an embodied interface for data navigation and interaction. By bridging the gap between flexible software and reconfigurable hardware, the project contributes to the emerging field of Data-Human Interaction and addresses the societal need for accessible and inclusive scientific tools. The interface’s design emphasizes versatility, enabling researchers to rapidly prototype new ideas and integrate novel sensory modalities for enhanced data understanding.

The project is inspired by the sensory system of the octopus, whose tentacles are capable of different sensory modalities, including touch, taste, smell and chemical analysis.

The “Octopus” interface

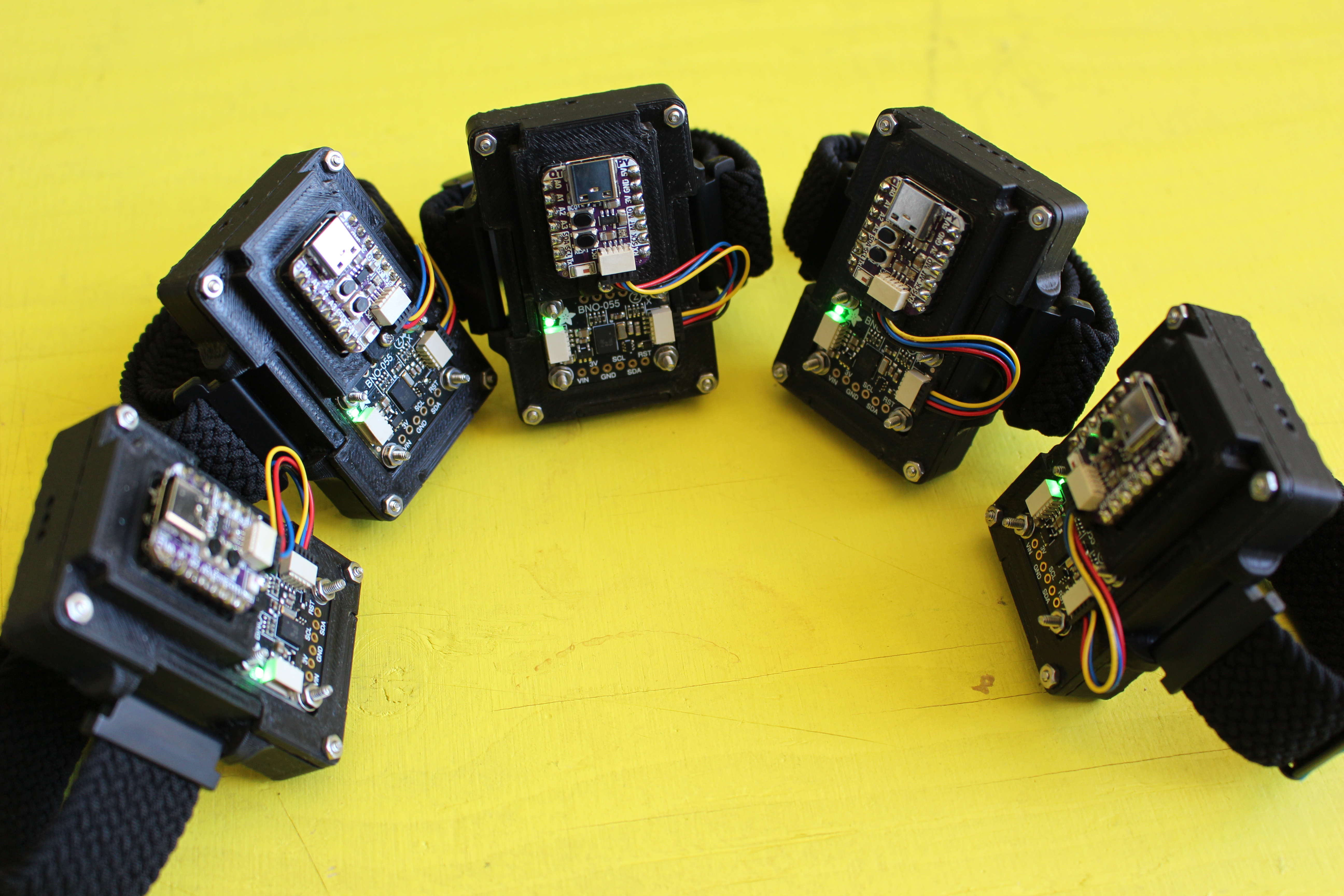

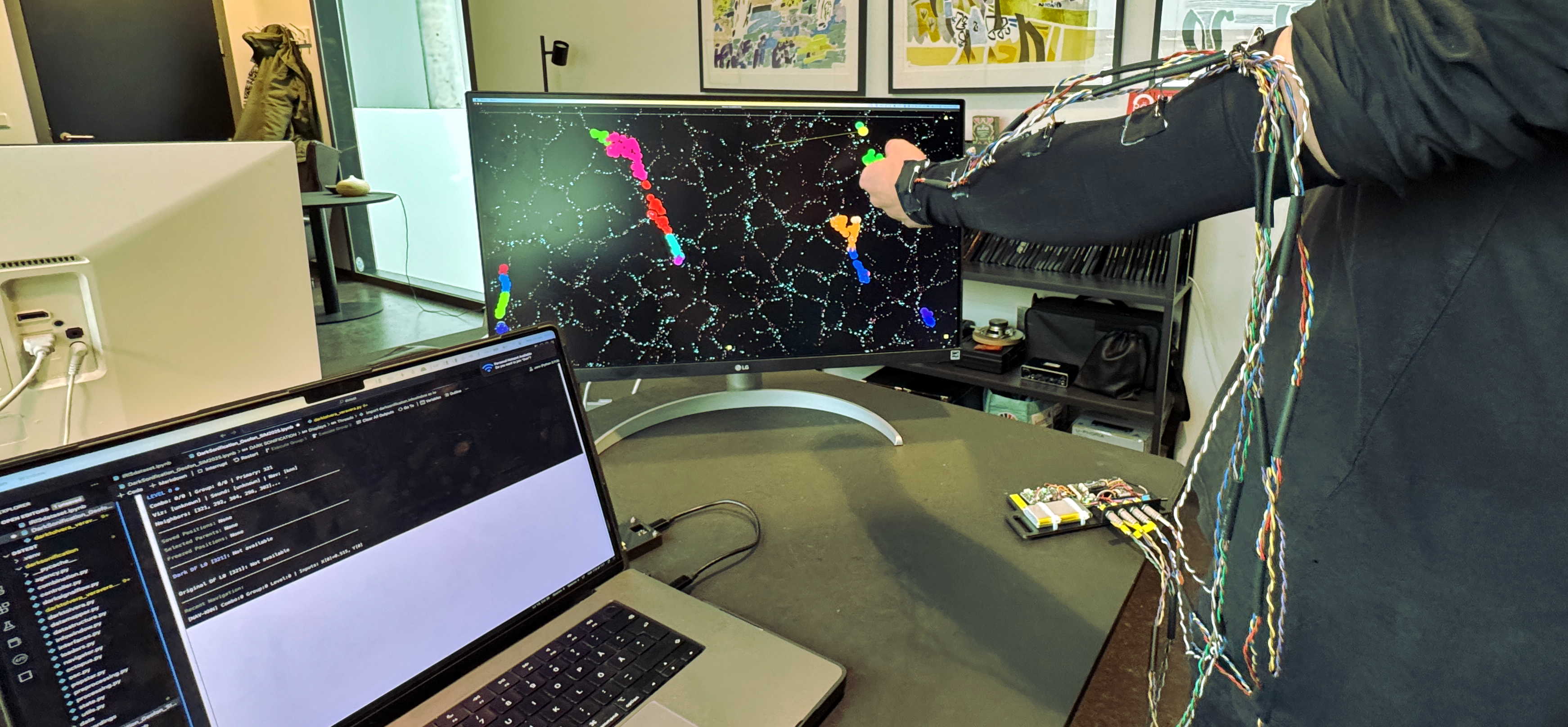

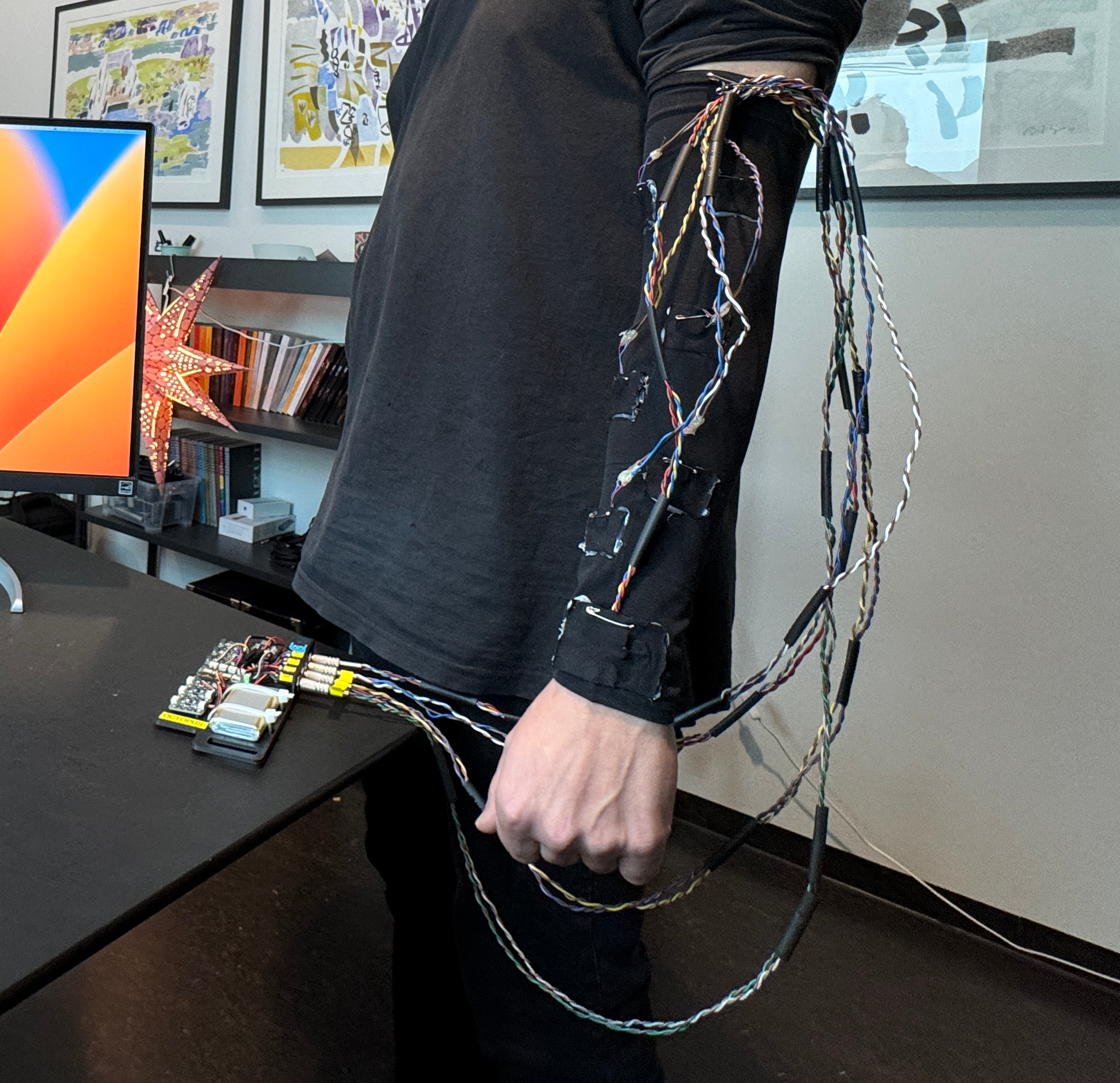

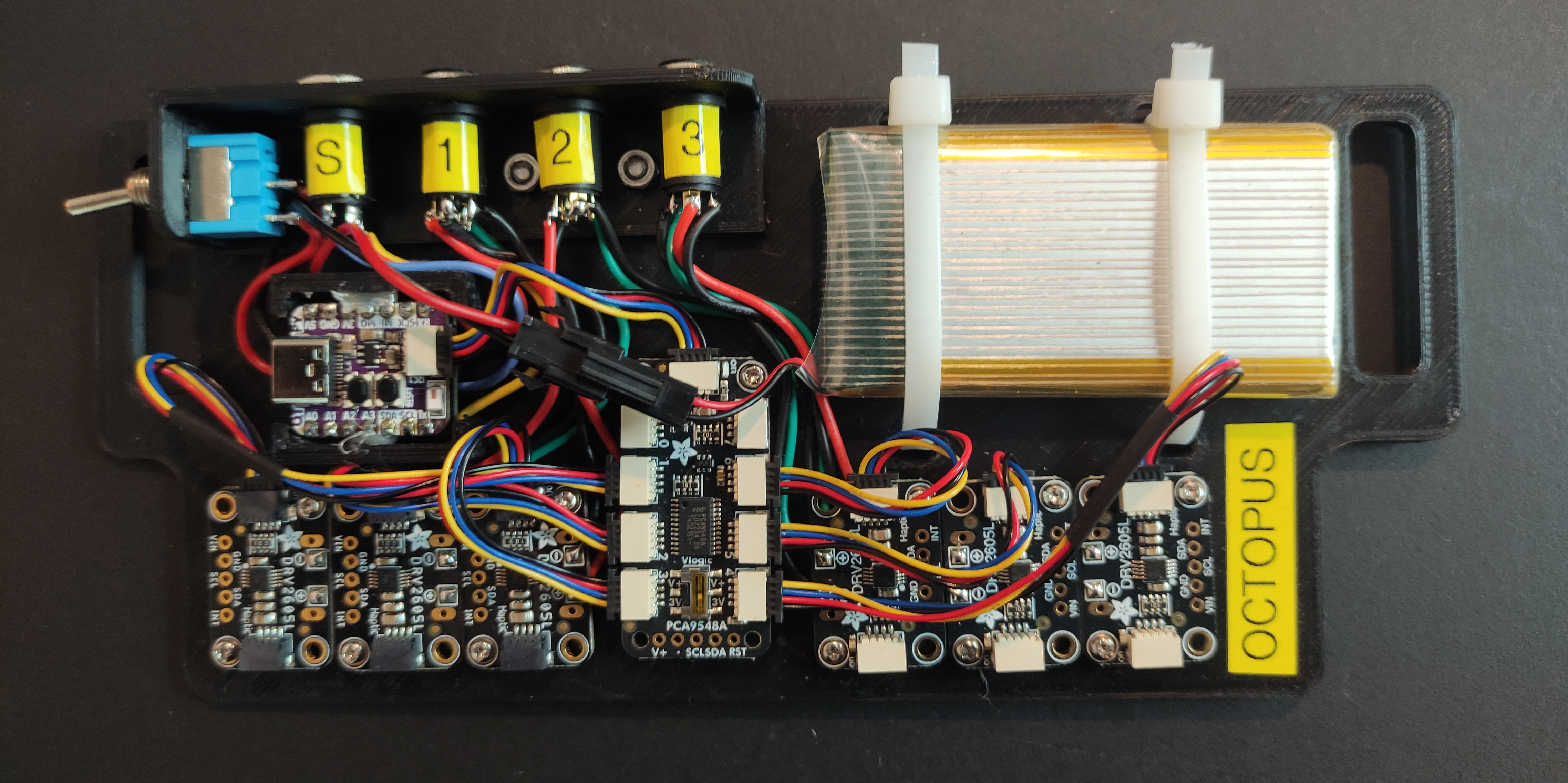

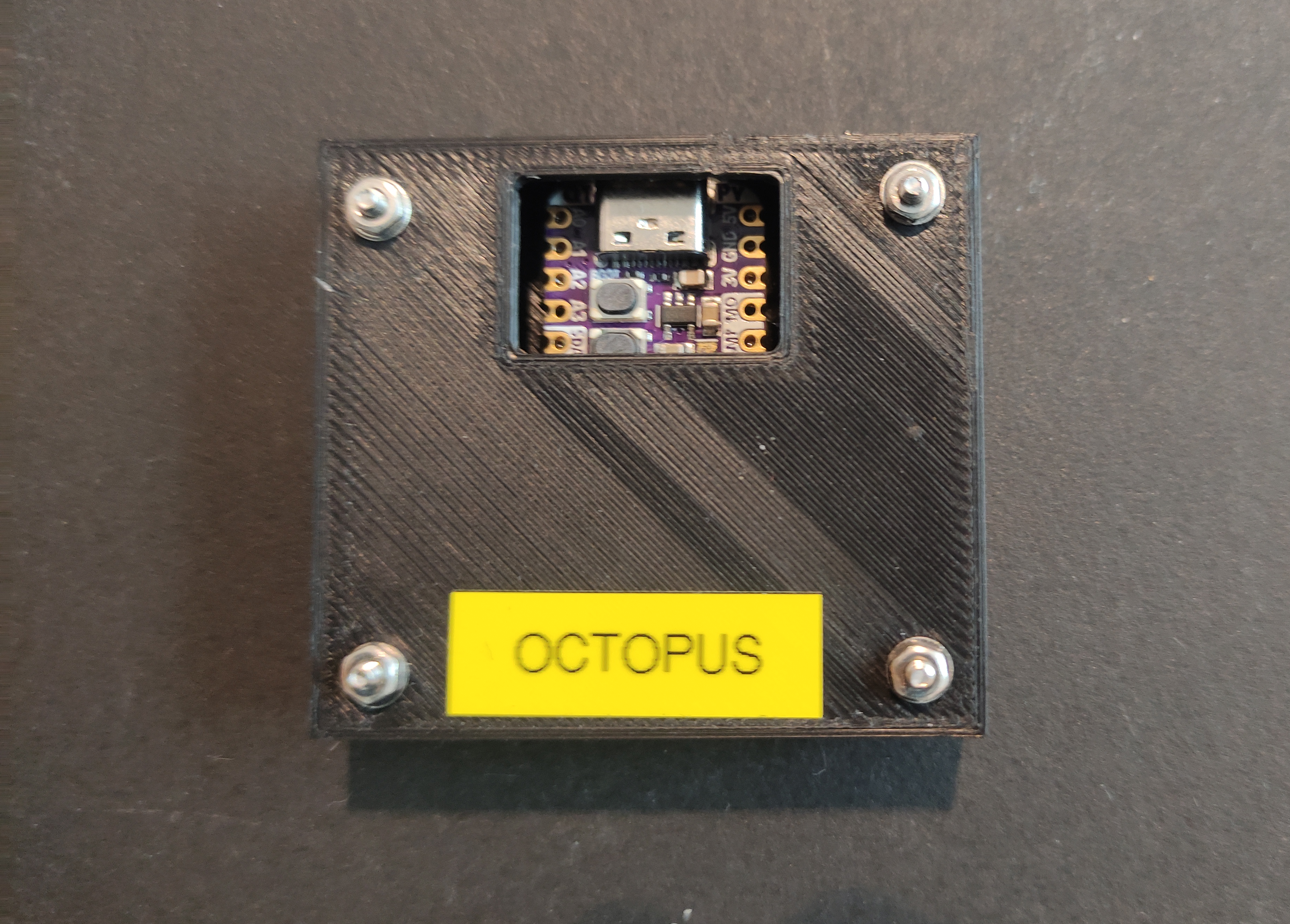

“Octopus” is an affordable, simple-to-build, autonomous and portable device that provides input and output for physically interfacing with data displays in the Python programming language. The system consists of two modules: the translator (Fig. 2) and the communication node (Fig. 4). Seven “tentacles” can be attached to the communication node, one acting as an input and six as outputs (Fig. 1). As input, an Inertial Measurement Unit (IMU) provides 3-D orientation and acceleration measurements, enabling spatial navigation by tilting the wrist, although any digital sensor capable of I2C communication can be used instead. The output provides haptic feedback through six channels of linear resonant actuator (LRA) discs that can be configured and driven independently. The “tentacles” connect to the communication node with TRRS connectors (Fig. 5). The translator unit connects to a computer via USB and transfers data between the data display and the communication node using the OSC protocol over serial. Data transmission between the communication node and the translator is wireless, via the ESP-NOW protocol. The communication node is battery-powered, with an estimated autonomy of 5 to 11 hours depending on the configuration. The Python library for data transmission between “Octopus” and the data display, the code for programming both units, and the 3D printing files are open access and available on the project’s GitHub page.
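As an illustration of the wrist-tilt navigation described above, here is a minimal Python sketch of how a data display might turn an accelerometer reading into a position in a dataset. It is not taken from the project's library: the function names, the axis convention, and the 45-degree tilt range are assumptions made for this example, and it assumes the sensor is held still enough that gravity dominates the reading.

```python
import math


def tilt_from_accel(ax, ay, az):
    """Estimate pitch and roll in degrees from one accelerometer sample.

    Assumes the reading is dominated by gravity (the wrist is not
    accelerating strongly) and a hypothetical axis convention in which
    z points up when the sleeve lies flat.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def tilt_to_index(angle_deg, n_items, max_angle=45.0):
    """Map a tilt angle in [-max_angle, max_angle] to an index in [0, n_items - 1].

    Angles outside the range are clamped, so an extreme tilt simply
    selects the first or last item.
    """
    clamped = max(-max_angle, min(max_angle, angle_deg))
    fraction = (clamped + max_angle) / (2 * max_angle)
    return min(n_items - 1, int(fraction * n_items))


if __name__ == "__main__":
    # Hypothetical sample: sleeve rolled slightly to one side.
    pitch, roll = tilt_from_accel(0.0, 0.3, 0.95)
    print("row:", tilt_to_index(pitch, 10), "column:", tilt_to_index(roll, 10))
```

In this sketch pitch and roll each select one axis of a 10 x 10 grid; a real display would likely smooth the signal and choose its own mapping.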

“Octopus” is deliberately designed to be versatile and customizable. It serves as an interface that connects data with physical exploration by mapping the data onto a physical artifact that can be sculpted into different geometries, enabling an embodied experience. As such, “Octopus” allows for easy deployment of artifacts for interactive data exploration through tactile engagement. Additionally, “Octopus” is designed to support multiple wearable devices interacting with the same dataset, enabling collaborative data exploration. The haptic feedback is configured with three variables: vibrator index, intensity, and duration. The optimal vibration frequency for an LRA is its resonant frequency, which is defined by the hardware’s physical characteristics.
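To make the three haptic variables concrete, the following Python sketch packs a vibrator index, an intensity, and a duration into a single OSC message using only the standard library. The byte layout follows the OSC message format (null-padded address and type-tag strings, big-endian arguments), but everything specific to “Octopus” is an assumption for illustration: the address `/octopus/haptic`, the argument order and types, the 0.0 to 1.0 intensity range, and the millisecond duration are hypothetical and may differ from the project's actual library.

```python
import struct

NUM_CHANNELS = 6  # six LRA output channels, as described in the text


def _osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def haptic_message(index, intensity, duration_ms, address="/octopus/haptic"):
    """Build an OSC message carrying one haptic command.

    The address and the (int, float, int) argument layout are
    hypothetical; they are not taken from the project's code.
    """
    if not 0 <= index < NUM_CHANNELS:
        raise ValueError("index must be between 0 and 5")
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be between 0.0 and 1.0")
    if duration_ms < 0:
        raise ValueError("duration_ms must not be negative")
    # Type tags ",ifi": int32 index, float32 intensity, int32 duration.
    arguments = struct.pack(">ifi", index, intensity, duration_ms)
    return _osc_string(address) + _osc_string(",ifi") + arguments


if __name__ == "__main__":
    # Drive the third vibrator at half intensity for 200 ms.
    message = haptic_message(2, 0.5, 200)
    print(len(message), message)
```

The resulting bytes could be handed to whatever serial writer the data display uses. Packet framing on the serial link is left out of this sketch.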

The project was developed in the summer of 2025 at the Intelligent Instruments Lab, University of Iceland. It was funded by Rannis’ Student Innovation Fund and realised in collaboration with PhD researcher Miguel Angel Crozzoli.

Fig. 1 - Sleeve

Fig. 2 - Communication Node

Fig. 3 - Communication Node

Fig. 4 - Translator

Fig. 5 - Translator

References

[1] Crozzoli, M.A. (2025) “Dark Sonification: an Entangled Post-Interaction Multimodal Data Display System,” in Doctoral Consortium at the sixth decennial Aarhus conference: Computing X Crisis. Aarhus, Denmark. Available at: https://hal.science/hal-05191041 (Accessed: September 22, 2025).

[2] Crozzoli, M.A. and Magnusson, T. (2025) “Data Perceptualization through Affect in Dark Sonification.” Available at: https://doi.org/10.34626/2025_XCOAX_013